Worker

A Worker Resource in DataOS is a long-running process that performs specific tasks or computations indefinitely. To understand its key characteristics and what differentiates a Worker from a Workflow and a Service, refer to Core Concepts.

Worker in the Data Product Lifecycle

Worker Resources are integral to the build phase of the Data Product Lifecycle. They form part of the 'code' component in the data product definition and are essential for carrying out prolonged transformations. They are particularly useful when your transformation involves:

- Indefinite Execution: Continuously processing or transforming stream or batch data without a defined endpoint. For example, a Worker processing live sensor data from IoT devices and storing it in a dataset.

- Child Processes: Creating child processes for a main process, allowing for modular and scalable task execution. For example, a Worker handling background jobs in a web application.

- Independent Processing: Performing long-running transformations without requiring external network communication. For example, a Worker continuously monitoring independent data streams.

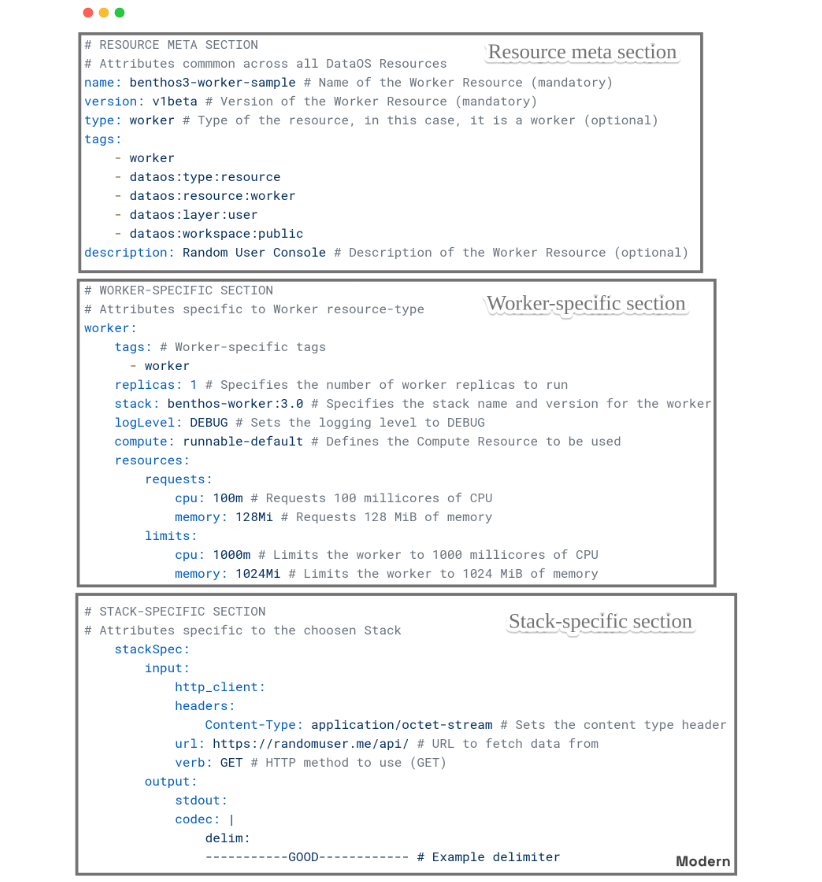

Structure of Worker manifest

# RESOURCE META SECTION
# Attributes common across all DataOS Resources
name: benthos3-worker-sample          # Name of the Worker Resource (mandatory)
version: v1beta                       # Version of the Worker Resource (mandatory)
type: worker                          # Type of the resource, in this case, it is a worker (optional)
tags:
  - worker
  - dataos:type:resource
  - dataos:resource:worker
  - dataos:layer:user
  - dataos:workspace:public
description: Random User Console      # Description of the Worker Resource (optional)

# WORKER-SPECIFIC SECTION
# Attributes specific to Worker resource-type
worker:
  tags:                               # Worker-specific tags
    - worker
  replicas: 1                         # Specifies the number of worker replicas to run
  stack: benthos-worker:3.0           # Specifies the stack name and version for the worker
  logLevel: DEBUG                     # Sets the logging level to DEBUG
  compute: runnable-default           # Defines the Compute Resource to be used
  resources:
    requests:
      cpu: 100m                       # Requests 100 millicores of CPU
      memory: 128Mi                   # Requests 128 MiB of memory
    limits:
      cpu: 1000m                      # Limits the worker to 1000 millicores of CPU
      memory: 1024Mi                  # Limits the worker to 1024 MiB of memory

  # STACK-SPECIFIC SECTION
  # Attributes specific to the chosen Stack
  stackSpec:
    input:
      http_client:
        headers:
          Content-Type: application/octet-stream   # Sets the content type header
        url: https://randomuser.me/api/            # URL to fetch data from
        verb: GET                                  # HTTP method to use (GET)
    output:
      stdout:
        codec: |                                   # Example delimiter follows
          delim:
          -----------GOOD------------
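To make the manifest structure concrete, the following Python sketch checks a manifest, represented as a plain dictionary, for the attributes marked mandatory above and compares resource requests against limits. It is an illustration only: the helper names (`cpu_millicores`, `memory_mib`, `check_worker_manifest`) are hypothetical and not part of DataOS, and the rule that a request should not exceed its limit is an assumption borrowed from the Kubernetes convention that this notation resembles.

```python
import re


def cpu_millicores(value: str) -> int:
    """Convert a CPU quantity such as '100m' or '1' to millicores."""
    if value.endswith("m"):
        return int(value[:-1])
    return int(float(value) * 1000)


def memory_mib(value: str) -> int:
    """Convert a memory quantity such as '128Mi' or '1Gi' to MiB."""
    match = re.fullmatch(r"(\d+)(Mi|Gi)", value)
    if match is None:
        raise ValueError(f"unsupported memory quantity: {value!r}")
    number, unit = int(match.group(1)), match.group(2)
    return number * 1024 if unit == "Gi" else number


def check_worker_manifest(manifest: dict) -> list[str]:
    """Return a list of problems found; an empty list means none were found."""
    problems = []
    # 'name' and 'version' are marked mandatory in the sample manifest.
    for key in ("name", "version"):
        if not manifest.get(key):
            problems.append(f"missing mandatory attribute: {key}")
    worker = manifest.get("worker")
    if not isinstance(worker, dict):
        problems.append("missing worker section")
        return problems
    resources = worker.get("resources", {})
    requests = resources.get("requests", {})
    limits = resources.get("limits", {})
    # Assumption: a request should not exceed the corresponding limit.
    if "cpu" in requests and "cpu" in limits:
        if cpu_millicores(requests["cpu"]) > cpu_millicores(limits["cpu"]):
            problems.append("cpu request exceeds cpu limit")
    if "memory" in requests and "memory" in limits:
        if memory_mib(requests["memory"]) > memory_mib(limits["memory"]):
            problems.append("memory request exceeds memory limit")
    return problems


# The sample manifest from this page, reduced to the checked attributes.
sample = {
    "name": "benthos3-worker-sample",
    "version": "v1beta",
    "type": "worker",
    "worker": {
        "replicas": 1,
        "stack": "benthos-worker:3.0",
        "compute": "runnable-default",
        "resources": {
            "requests": {"cpu": "100m", "memory": "128Mi"},
            "limits": {"cpu": "1000m", "memory": "1024Mi"},
        },
    },
}

print(check_worker_manifest(sample))
```

A check like this is no substitute for the validation DataOS performs when a manifest is applied; it only shows how the sections of the manifest relate to each other.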

First Steps

A Worker Resource in DataOS can be created by applying its manifest file with the DataOS CLI. To learn more about this process, see First steps.
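As a rough illustration of that step, the Python sketch below assembles the CLI invocation for applying a manifest. It assumes the command takes the form `dataos-ctl apply -f <manifest> -w <workspace>`; the file name `worker.yaml`, the `public` workspace default, and the helper name `build_apply_command` are placeholders chosen for this example, so check the First steps page for the exact command in your environment.

```python
import subprocess


def build_apply_command(manifest_path: str, workspace: str = "public") -> list[str]:
    """Build the argument list for applying a Worker manifest.

    Assumed CLI form: dataos-ctl apply -f <manifest> -w <workspace>
    """
    return ["dataos-ctl", "apply", "-f", manifest_path, "-w", workspace]


def apply_manifest(manifest_path: str, workspace: str = "public") -> None:
    """Run the apply command; raises CalledProcessError if the CLI reports failure."""
    subprocess.run(build_apply_command(manifest_path, workspace), check=True)


# Print the command instead of running it, so the sketch works without the CLI installed.
print(" ".join(build_apply_command("worker.yaml")))
```

Calling `apply_manifest("worker.yaml")` would execute the command and requires the DataOS CLI to be installed and logged in.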

Configuration

Workers can be configured to autoscale to match varying workload demands, reference pre-defined Secrets and Volumes, and more. The specific configuration varies with the use case. For a detailed breakdown of the configuration options and attributes, refer to Attributes of Worker manifest.