Integrating Data Products with Tableau Desktop¶

This document outlines the process for integrating Tableau Desktop with DataOS.

The semantic model can be integrated with Tableau in the following ways:

- Using Data Product Hub (Recommended - GUI based): Add connection details through an intuitive graphical interface.

- Using cURL command (Command-Line based): Add connection details in the terminal via a cURL command.

Using Data Product Hub (Recommended - GUI based)¶

Follow the steps below:

Step 1: Navigate to the Data Product Hub¶

Access the Home Page of DataOS. From the home page, navigate to the Data Product Hub to explore the various Data Products available within the platform.


Step 2: Browse and Select a Data Product¶

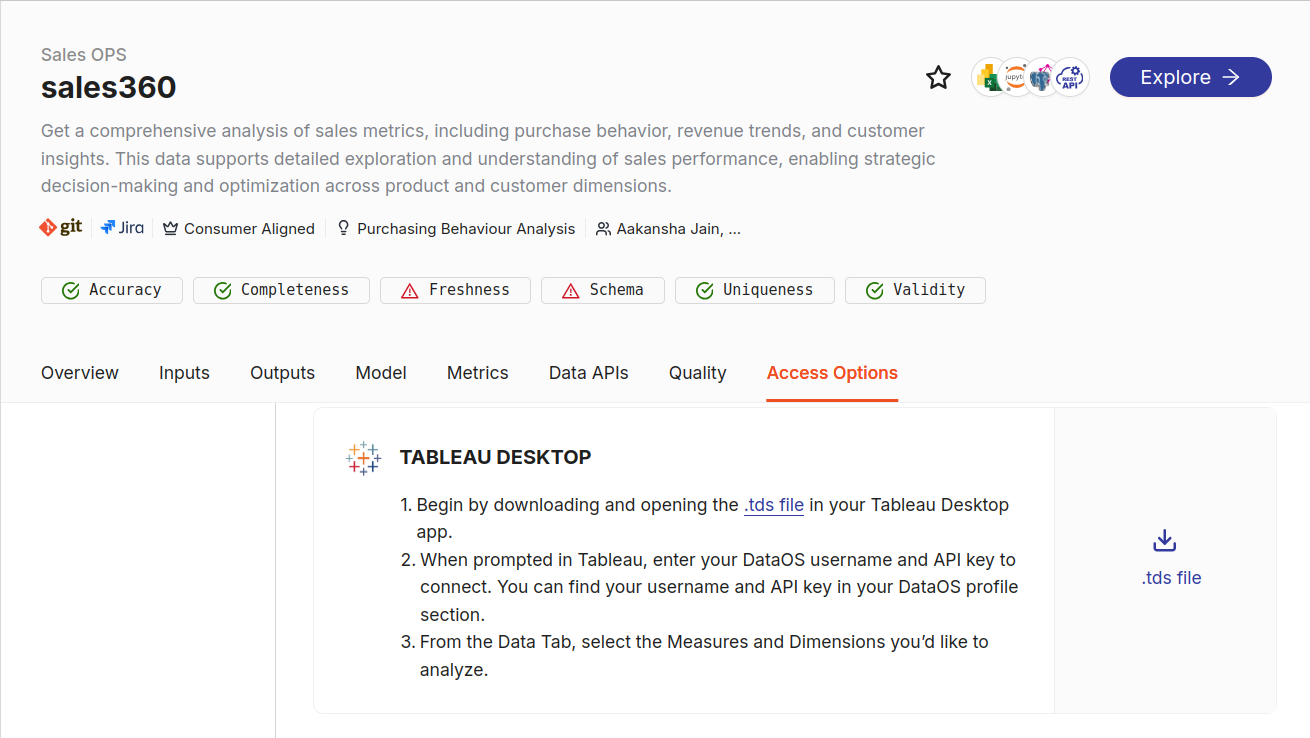

In the Data Product Hub, users can browse through a comprehensive list of available Data Products. To integrate with Tableau, click on a specific Data Product of interest, for example, Sales360.


Step 3: Access Integration Options¶

After selecting the Sales360 Data Product, navigate to the Access Options tab. This tab lists the available methods to access and interact with the Data Product, including the BI Sync tab, where the Tableau Desktop option is located.

Step 4: Download and Extract the .tds File¶

Download the zip file containing the .tds file and extract it into Tableau's default repository, typically located at My Tableau Repository\Datasources\.
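The extraction step can also be scripted. The following is a minimal sketch, not part of the DataOS tooling: the function name `extract_tds_archive` is hypothetical, and the target folder must be supplied by the caller (on many installations it sits under the user's Documents folder, but that location is an assumption and can be changed in Tableau).

```python
import zipfile
from pathlib import Path


def extract_tds_archive(archive_path, datasources_dir):
    """Extract a downloaded archive into Tableau's Datasources folder.

    Returns the paths of the .tds files that were extracted.
    Hypothetical helper: the folder location is supplied by the caller.
    """
    target = Path(datasources_dir)
    # Create the folder if it does not exist yet.
    target.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(archive_path) as archive:
        archive.extractall(target)
        names = archive.namelist()
    # Report only the Tableau data source files from this archive.
    return [target / name for name in names if name.lower().endswith(".tds")]
```

For example, `extract_tds_archive("sales360.zip", Path.home() / "Documents" / "My Tableau Repository" / "Datasources")` would place the files where Tableau Desktop looks for saved data sources, assuming the repository is in its default location and the download is named `sales360.zip`.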


Step 5: Proceed with Data Product¶

Click on the Data Product to continue.


Step 6: Enter Credentials¶

Users will be prompted to enter their username and API key.


Step 7: Visualize Data in Tableau Desktop¶

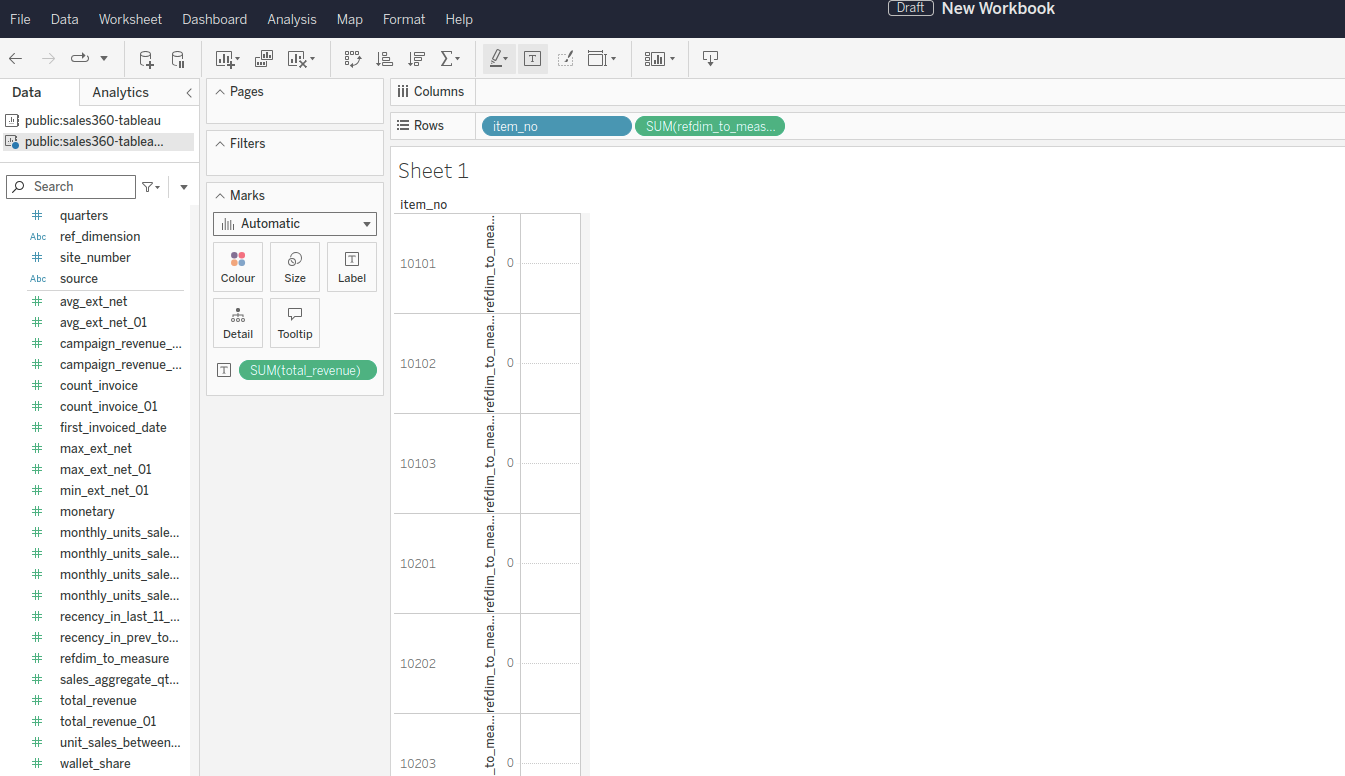

Once the connection is established, users can begin visualizing the Data Product in Tableau Desktop.


Step 8: Publishing workbook/dashboard¶

The publisher can embed their credentials (DataOS username and API key) or require users to provide credentials each time they access the published Workbook/Sheet/Dashboard. If the publisher selects ‘Embed password for data source’, users can access the published workbook and dashboard without providing credentials.

Note: Once the credentials are embedded, they cannot be viewed or retrieved. To change the embedding option, overwrite the workbook using ‘Publish As’.

Using cURL command (Command-Line based)¶

Prerequisites¶

- Curl: Ensure that curl is installed on the system. For Windows systems, curl.exe may be necessary.

- Lens API endpoint: The API endpoint provided by Lens to sync the semantic model, enabling integration with Tableau.

- Access credentials: Tableau access credentials, such as username, password, and project name, are required.

- DataOS API key: Ensure the DataOS API key is available. Get it by using the following command:

Syntax command¶

To sync the semantic model with Tableau, copy the payload below and replace the placeholders with appropriate values:

Command configuration details¶

| Placeholder | Description |
|---|---|
| <DATAOS_FQDN> | The fully qualified domain name (FQDN) of your DataOS environment. Example: gentle-akita.dataos.app |
| <WORKSPACE_NAME> | The DataOS workspace where your Lens (semantic model) resides. Example: public, sandbox |
| <LENS_NAME> | The name of the Lens (semantic model) to sync. Example: sales-analysis |
| <APIKEY> | Your DataOS API key for authentication. Obtain this from your DataOS Profile > API Key section. |
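A common mistake when filling in a command template is leaving one of the placeholders from the table unreplaced. The sketch below shows one way to substitute the four placeholders and fail early if a value is missing. The function `fill_placeholders` is a hypothetical helper, and any template passed to it must come from the actual sync command; this sketch does not reproduce or assume the Lens endpoint.

```python
PLACEHOLDERS = ("DATAOS_FQDN", "WORKSPACE_NAME", "LENS_NAME", "APIKEY")


def fill_placeholders(template, values):
    """Replace <NAME> placeholders in a command template.

    Raises ValueError when a placeholder used by the template has no
    (or an empty) value, so an incomplete command is never produced.
    Hypothetical helper, not part of DataOS.
    """
    missing = [
        name for name in PLACEHOLDERS
        if f"<{name}>" in template and not values.get(name)
    ]
    if missing:
        raise ValueError(f"missing values for: {', '.join(missing)}")
    result = template
    for name in PLACEHOLDERS:
        result = result.replace(f"<{name}>", values.get(name) or "")
    return result
```

With the example values from the table, a template fragment such as `<WORKSPACE_NAME>:<LENS_NAME>` would become `public:sales-analysis`.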

Exploring the Data Product on Tableau Cloud¶

Once the sync is successful, the data source is published to Tableau Cloud or Tableau Server.

Step 1: Log in to Tableau Cloud¶

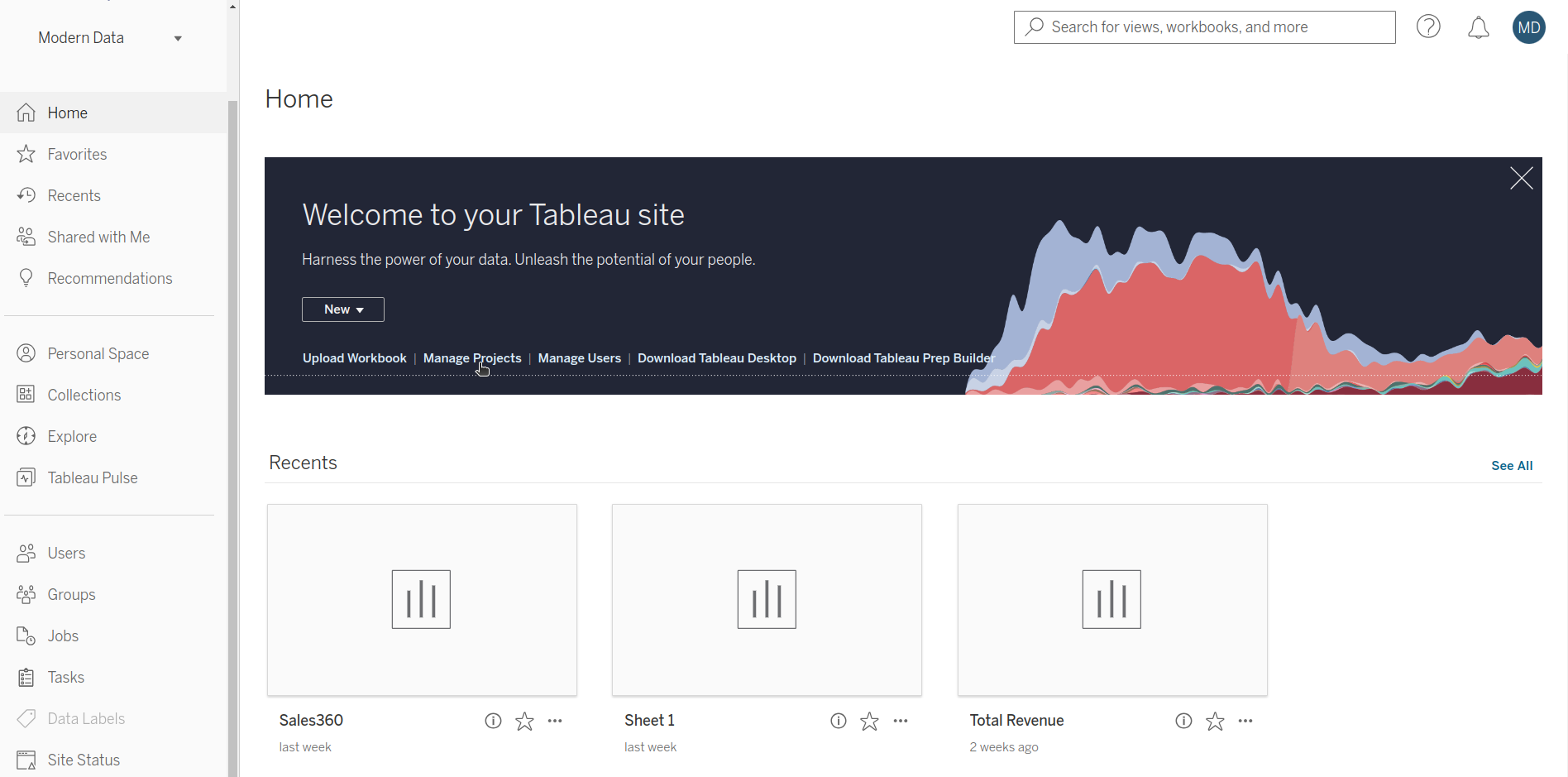

Users should log in to Tableau Cloud using their Tableau credentials. After login, the Tableau Cloud home page is displayed.

Step 2: Manage Projects¶

Supported data types¶

| Category | Data Type | Support Status | Recommended Approach |
|---|---|---|---|
| Dimension | time | Supported | NA |
| Dimension | string | Supported | NA |
| Dimension | number | Supported | NA |
| Dimension | boolean | Supported | NA |
| Measure | max | Supported | NA |
| Measure | min | Supported | NA |
| Measure | number | Supported | NA |
| Measure | sum | Supported | NA |
| Measure | count | Supported | NA |
| Measure | boolean | Auto-converts to Dimension | NA |
| Measure | string | Auto-converts to Dimension | NA |
| Measure | time | Auto-converts to Dimension | NA |
| Measure | avg | Not Supported | Option 1: To use a measure of type 'avg', define an additional measure of type 'count' in that entity: name: count type: count sql: '1' Option 2: Use a measure of type 'number' and define the average logic in SQL: measures: - name: total_accounts type: number sql: "avg({accounts})" |
| Measure | count_distinct | Not Supported | Option 1: To use a measure of type 'count_distinct', additionally define a measure of type 'count' in that entity: name: count type: count sql: '1' Option 2: Or, use a measure of type 'number' and define the logic for count_distinct in SQL: measures: - name: total_accounts type: number sql: "count(distinct({accounts}))" |
| Measure | count_distinct_approx | Not Supported | NA |
| Rolling Window | - | Supported | NA |

Important considerations for Tableau Integration¶

1. Handling Entities without Relationships: An error will occur during synchronization if any entity in the data model lacks a defined relationship. To resolve this issue, the entity can be hidden to avoid synchronization errors.

2. Live connection: The connection between the Lens semantic layer and Tableau Cloud is live, meaning that any changes to the underlying data or measure logic will automatically be reflected in Tableau.

3. Schema changes: If there are schema updates, such as adding new dimensions or measures, the integration steps will need to be repeated to incorporate these changes into Tableau.

4. Avoiding cyclic dependencies: Tableau does not support cyclic dependencies within data models. To prevent integration issues, it is essential to ensure that the data model is free of cyclic dependencies prior to syncing with Tableau.

5. Visualization with multiple data sources: Tableau does not support data blending for live connections, so a visualization cannot combine data from multiple data sources. Only a single data source can be used to create a visualization.

6. Centralized management: All data sources should be managed and published by the admin on the server, with everyone else using this source.

7. Single authority for Desktop publications: If data sources are published via Tableau Desktop, ensure that all sources are published by a single authority to avoid multiple data source conflicts on the server.

8. Row limit: The Lens API has a maximum return limit of 50,000 rows per request. To obtain additional data, it is necessary to set an offset. This row limit is in place to manage resources efficiently and ensure optimal performance.

9. Selection: It is important to select fields from tables that are directly related or logically joined, as the system does not automatically identify relationships between tables through transitive joins. Selecting fields from unrelated tables may result in incorrect or incomplete results.

10. Parameter Action: Action filters can be defined on measures/dimensions to filter visualizations effectively.

11. Default chart types: All default chart types provided by Tableau can be plotted and visualized without issues.

12. Rolling Window Measure: Querying a rolling window measure requires a time dimension with a date range filter applied to it. Follow these steps:

- Select the rolling window measure.

- Select the time dimension.

- To define granularity, right-click on the selected time dimension and set granularity (choose a granularity where the complete time, along with the year, is shown).

- Add the time dimension to the filter, and define the range filter.
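The row limit in item 8 implies a paging loop whenever the Lens API is queried directly for more than 50,000 rows. The sketch below illustrates that loop under stated assumptions: `fetch_page` is a hypothetical caller-supplied function that performs one request with a given limit and offset and returns a list of rows. The actual request format of the Lens API is not shown in this document and is not assumed here.

```python
PAGE_LIMIT = 50_000  # maximum rows per request, as stated in item 8


def fetch_all_rows(fetch_page, page_limit=PAGE_LIMIT):
    """Collect all rows by advancing the offset one page at a time.

    fetch_page(limit=..., offset=...) is a hypothetical callable that
    performs a single request and returns a list of rows.
    """
    rows = []
    offset = 0
    while True:
        page = fetch_page(limit=page_limit, offset=offset)
        rows.extend(page)
        # A page shorter than the limit means there is nothing left to read.
        if len(page) < page_limit:
            break
        offset += page_limit
    return rows
```

The loop stops on the first short (or empty) page, so a result whose size is an exact multiple of the limit costs one extra request that returns no rows.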

Error handling¶

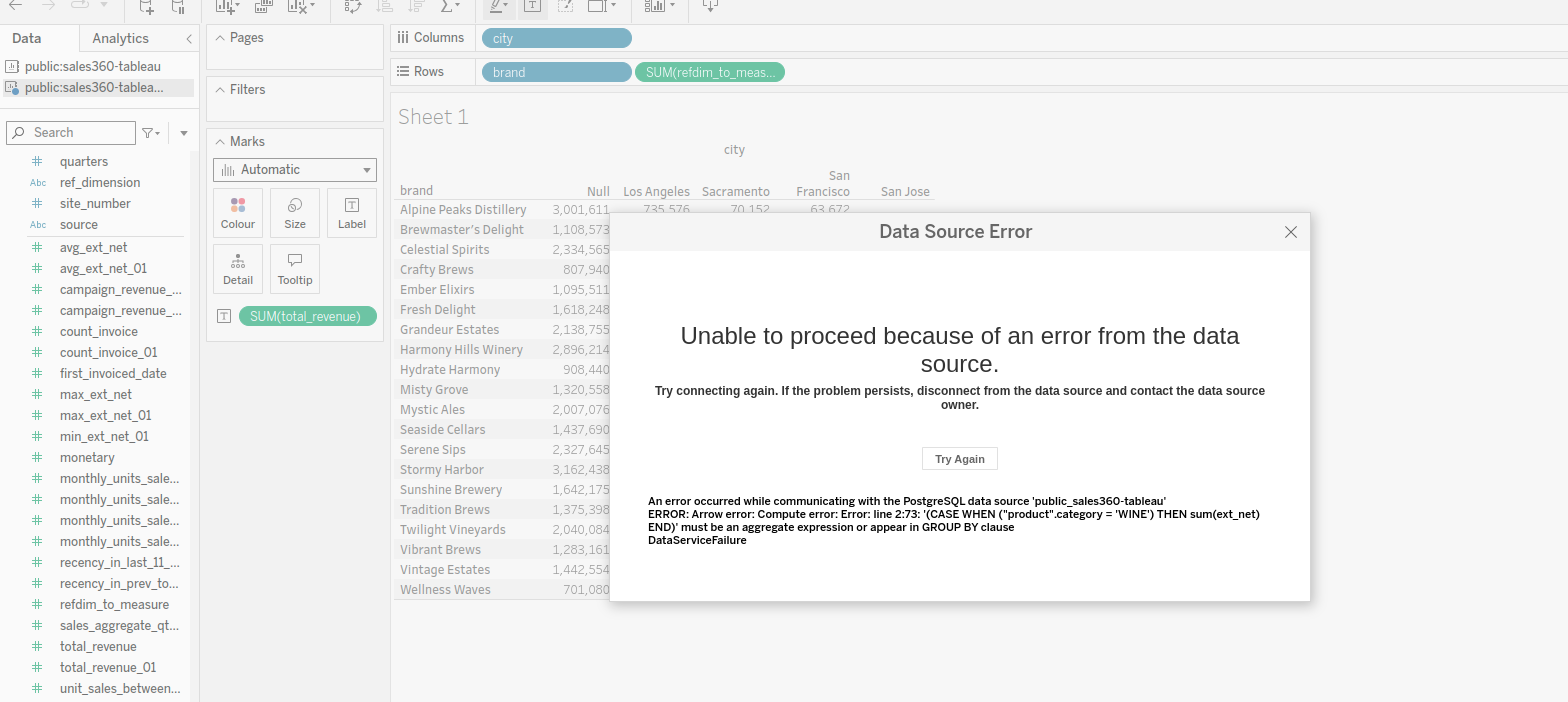

Scenario 1: Handling syntactical errors in measures or dimensions

If a measure or dimension contains a syntactical error (and is also not functioning in Lens Studio), the following error will appear when attempting to select such a measure or dimension:

After correcting the syntactical error in the measure or dimension within Lens, the error will no longer appear. To reflect the changes in Tableau, refresh the data source and re-select the measure or dimension to display it in the chart.

Scenario 2: Handling inactive Lens in the environment

If the Lens is not active in the environment while working on an existing workbook in Tableau or when attempting to establish a new connection, an error will be encountered. This may prevent access to or querying data from the Lens. Verify that the Lens exists and is active before syncing.

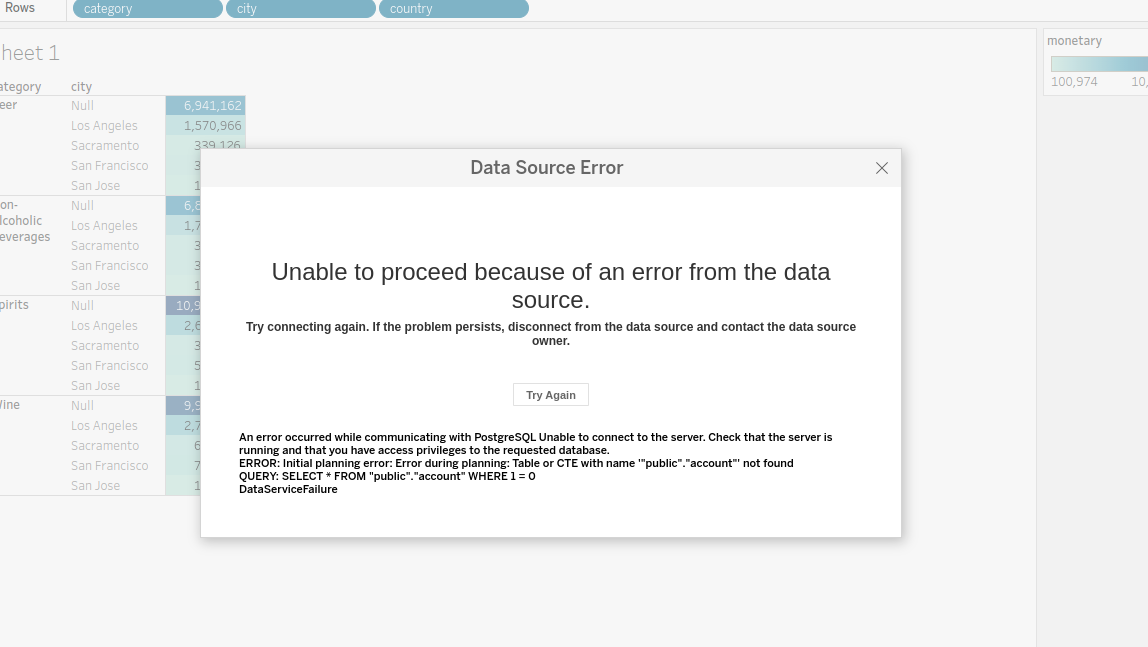

Scenario 3: Handling data source errors due to access restrictions

If the Account table is set to public = false, a data source error will occur in Tableau. The error message will indicate "Account table not found," which prevents querying or using data from that table.

To resolve this issue, ensure the Account table is accessible (set to public = true or assign appropriate permissions) and then resync the Lens in Tableau to regain access.
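Two sync-time problems listed under the important considerations, entities without relationships and cyclic dependencies, can be checked before syncing rather than diagnosed afterwards. The sketch below is illustrative only. It assumes a simplified, hypothetical representation of the model as a dictionary mapping each entity name to its `joins` (a list of target entities) and an optional `public` flag, and it treats joins as directed edges. It does not read actual Lens manifests.

```python
def find_unrelated_entities(model):
    """Return visible entities that neither declare nor receive a join."""
    visible = {name for name, spec in model.items() if spec.get("public", True)}
    related = set()
    for name in visible:
        for target in model[name].get("joins", []):
            if target in visible:
                related.update((name, target))
    return sorted(visible - related)


def find_cycle(model):
    """Return one join cycle as a list of entity names, or None."""
    unvisited, in_progress, done = 0, 1, 2
    state = {name: unvisited for name in model}
    path = []

    def visit(name):
        state[name] = in_progress
        path.append(name)
        for target in model[name].get("joins", []):
            if target not in model:
                continue  # ignore joins to entities outside the model
            if state[target] == in_progress:
                # Reached an entity already on the current path: a cycle.
                return path[path.index(target):] + [target]
            if state[target] == unvisited:
                cycle = visit(target)
                if cycle:
                    return cycle
        path.pop()
        state[name] = done
        return None

    for name in model:
        if state[name] == unvisited:
            cycle = visit(name)
            if cycle:
                return cycle
    return None
```

Entities reported by `find_unrelated_entities` would need either a relationship or to be hidden before syncing. If undirected loops between entities also matter for a given model, the cycle check would need to be adapted accordingly.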

Governance of Model on Tableau Desktop¶

When the semantic model is activated via BI Sync in Tableau, data masking, restrictions, and permissions set by the publisher are automatically applied, ensuring consistent data security and compliance. The behavior of these policies (e.g., masking) may vary based on the Tableau user.

The Tableau management process involves authentication and authorization using the DataOS user ID and API key when accessing synced data models. This ensures that columns redacted by Lens data policies are restricted based on the user's group permissions.

For example, if a user named iamgroot in the Analyst group is restricted from viewing the 'Annual Salary' column, this column will not be visible in either the Data Product exploration page or Tableau after syncing. Tableau Cloud requires the DataOS user ID and API key for authentication, ensuring that users can access the full model, except for any columns restricted by any data policies. This approach maintains security and guarantees that users only see the data they are authorized to view.
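The effect of group-based redaction in the example above can be sketched as a simple filter. This is a conceptual illustration only, not how Lens data policies are implemented. The policy shape (a mapping from group name to hidden columns) and the function `visible_columns` are hypothetical, and the sketch assumes a column is hidden if any of the user's groups restricts it.

```python
def visible_columns(columns, user_groups, redaction_policies):
    """Return the columns a user is allowed to see.

    redaction_policies maps a group name to the columns hidden from
    members of that group (hypothetical, simplified policy shape).
    """
    hidden = set()
    for group in user_groups:
        hidden |= set(redaction_policies.get(group, ()))
    # Preserve the original column order for the remaining columns.
    return [column for column in columns if column not in hidden]
```

For a user in the Analyst group and a policy hiding 'Annual Salary' from that group, the returned list would contain every column except 'Annual Salary', matching what the user would see both in the Data Product exploration page and in Tableau.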