Data Product Developer Journey

Data Product Developers are responsible for creating and managing data products within DataOS. They design, build, and maintain the data infrastructure and pipelines, ensuring the data is accurate, reliable, and accessible.

A data product developer is typically responsible for the following activities:

Understand Data Needs

- Gather and understand the business requirements and objectives for the data pipeline.
- Get the access rights required to build and run data movement workflows.

Understand Data Assets

Data product developers need to identify and evaluate the data sources to be integrated (databases, APIs, flat files, etc.) and determine each source's data formats, structures, and protocols. The DataOS Metis app can help you understand these details.

-

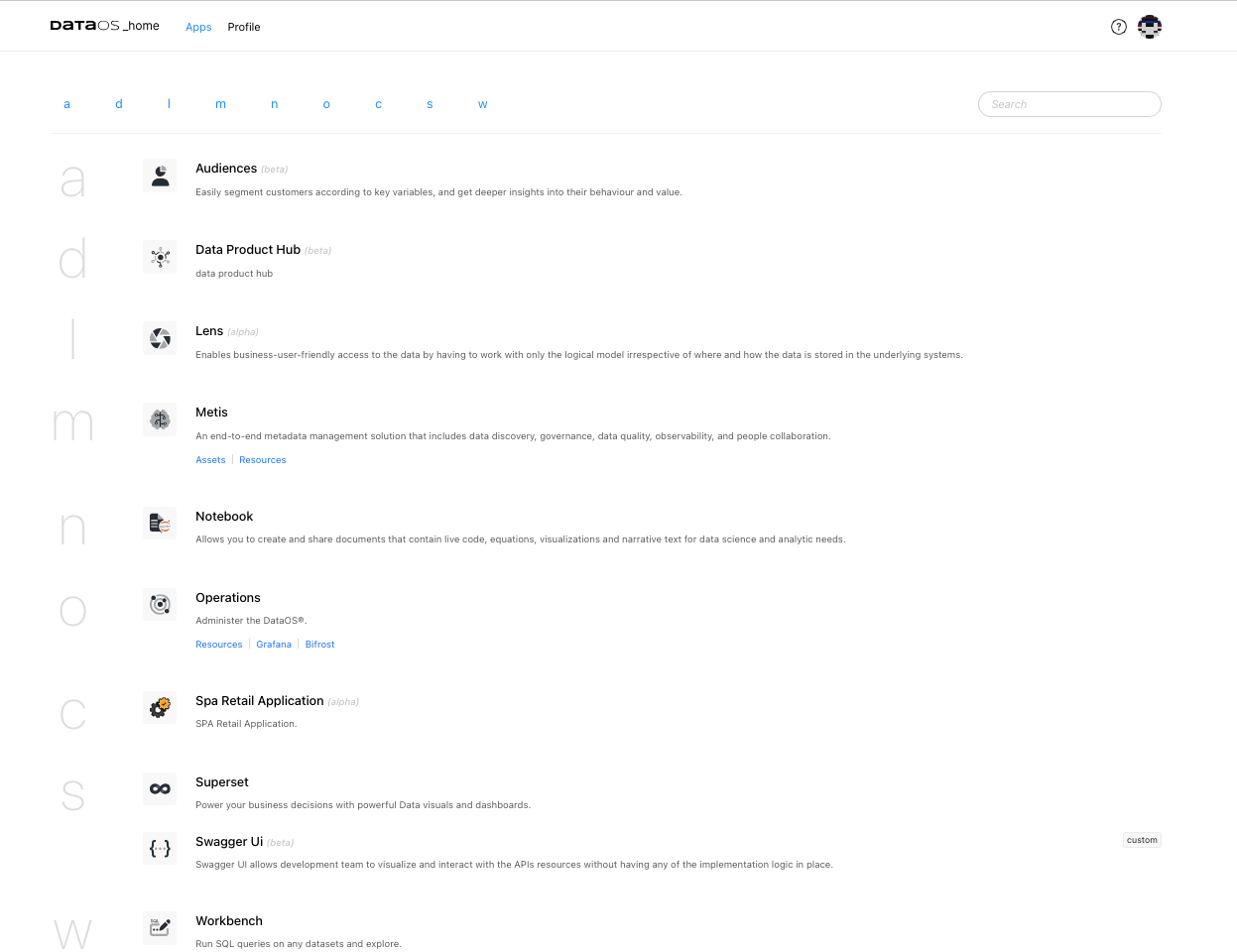

Login to DataOS and go to Metis app.

-

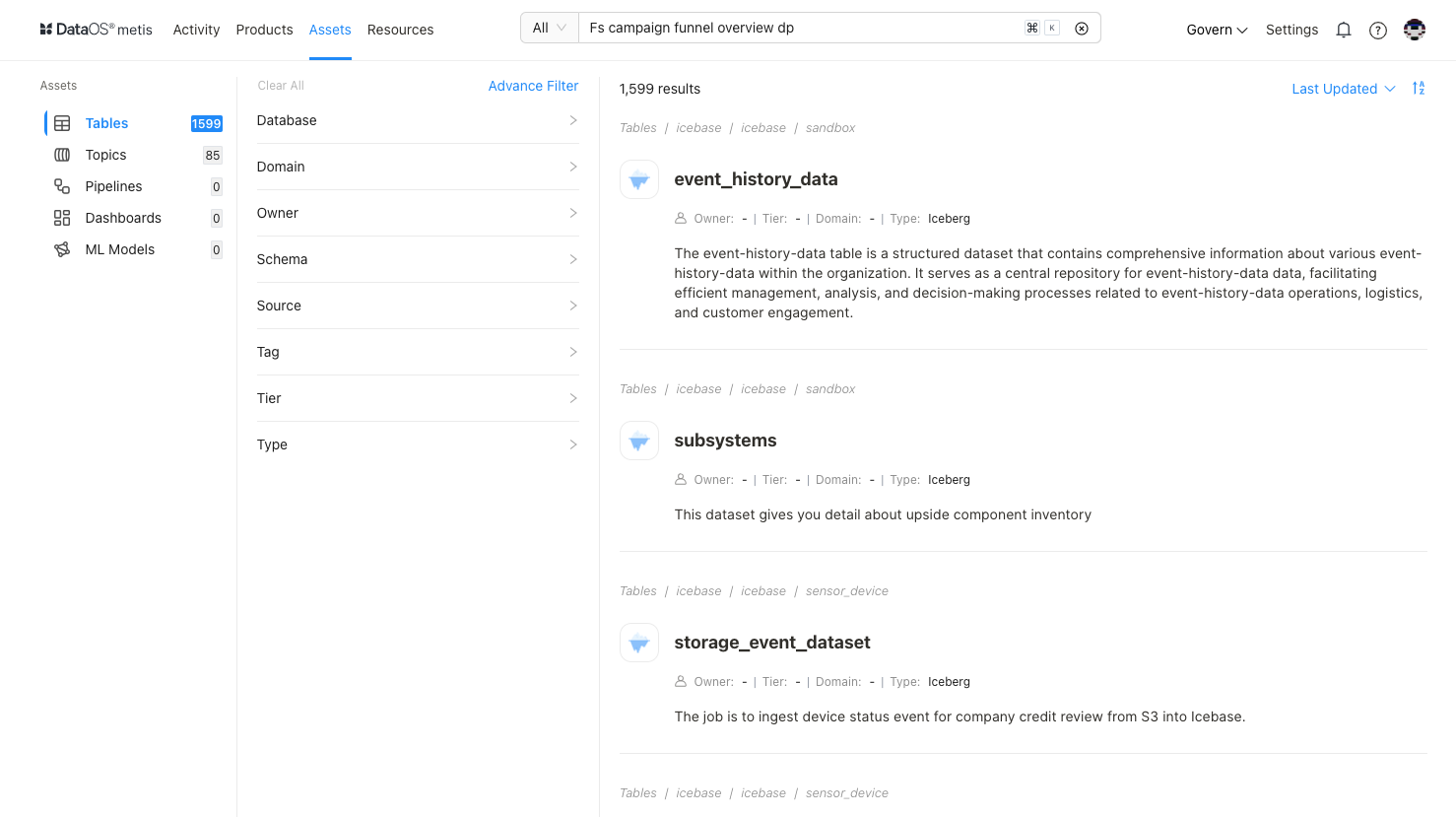

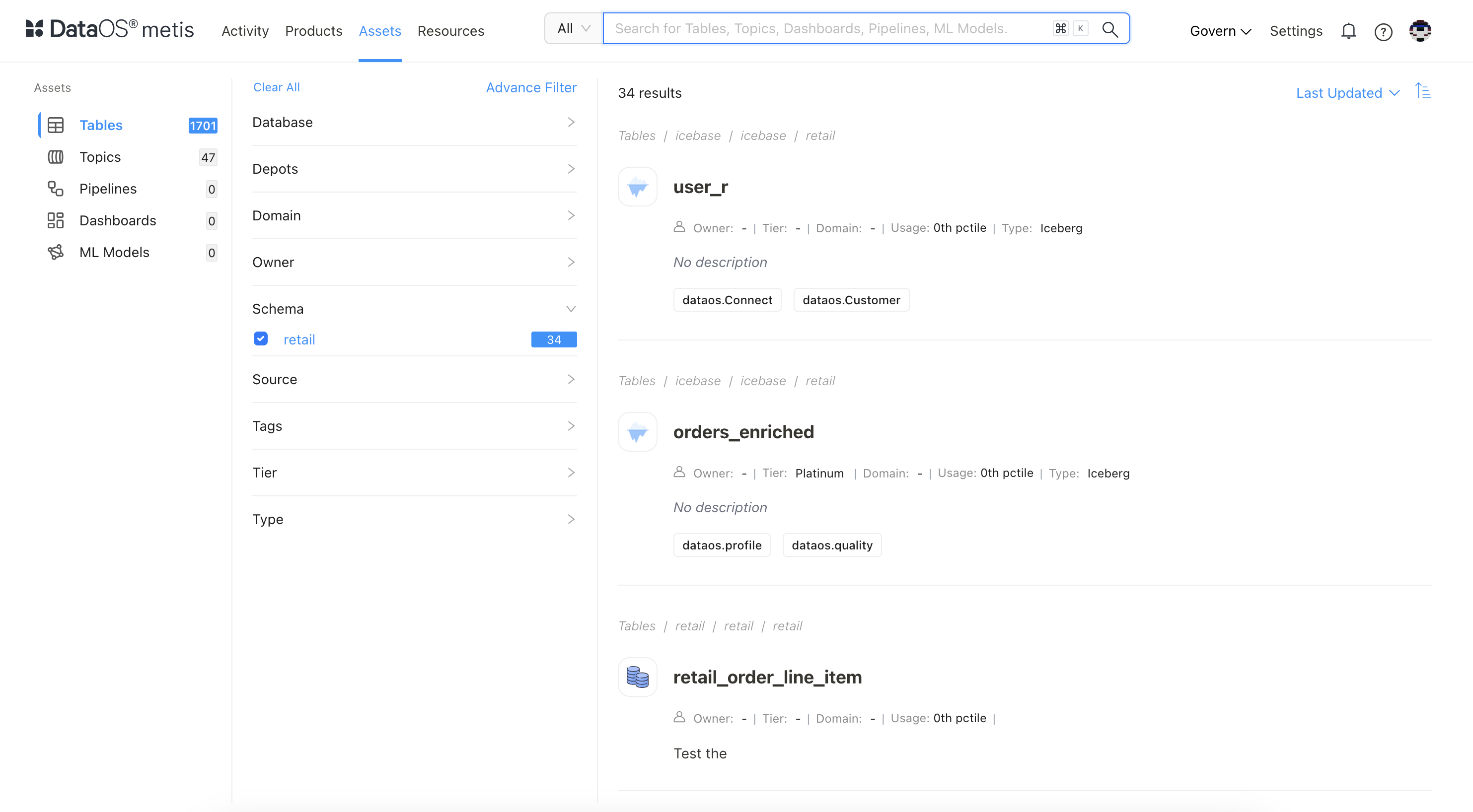

Go to Assets tab. Enter a search string to find your data asset quickly.

-

You can also apply filters to find your data asset.

-

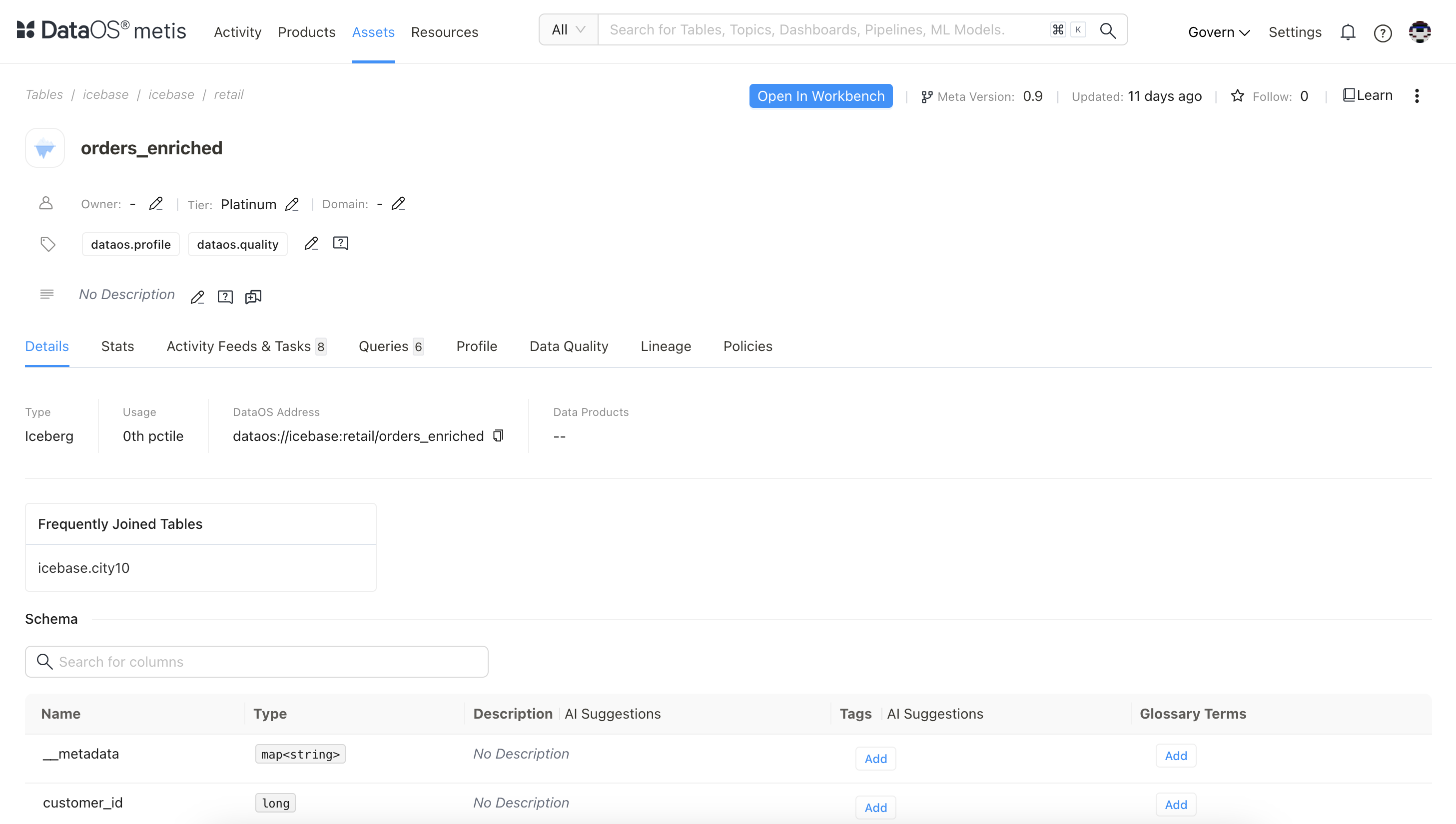

Click on the dataset for details such as schema and columns.

-

You can further explore the data asset by looking at its data and writing queries. Metis enables you to open the data asset in Workbench.

Perform Exploratory Data Analysis (EDA)

Exploratory Data Analysis is the initial examination of data that helps data product developers understand the data and make informed decisions. Before building pipelines or planning transformations, explore the data to understand the dataset's features, data types, and distributions. By running queries, you can identify patterns, relationships, anomalies, and outliers within the data.

-

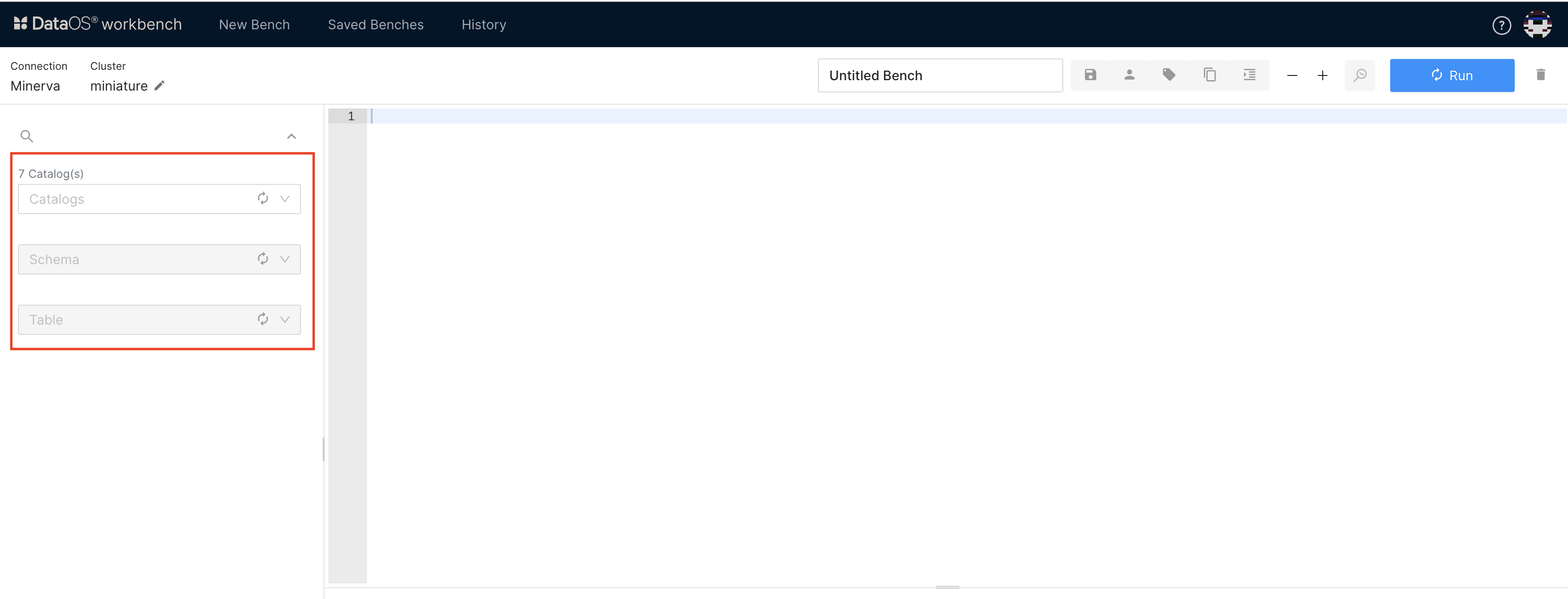

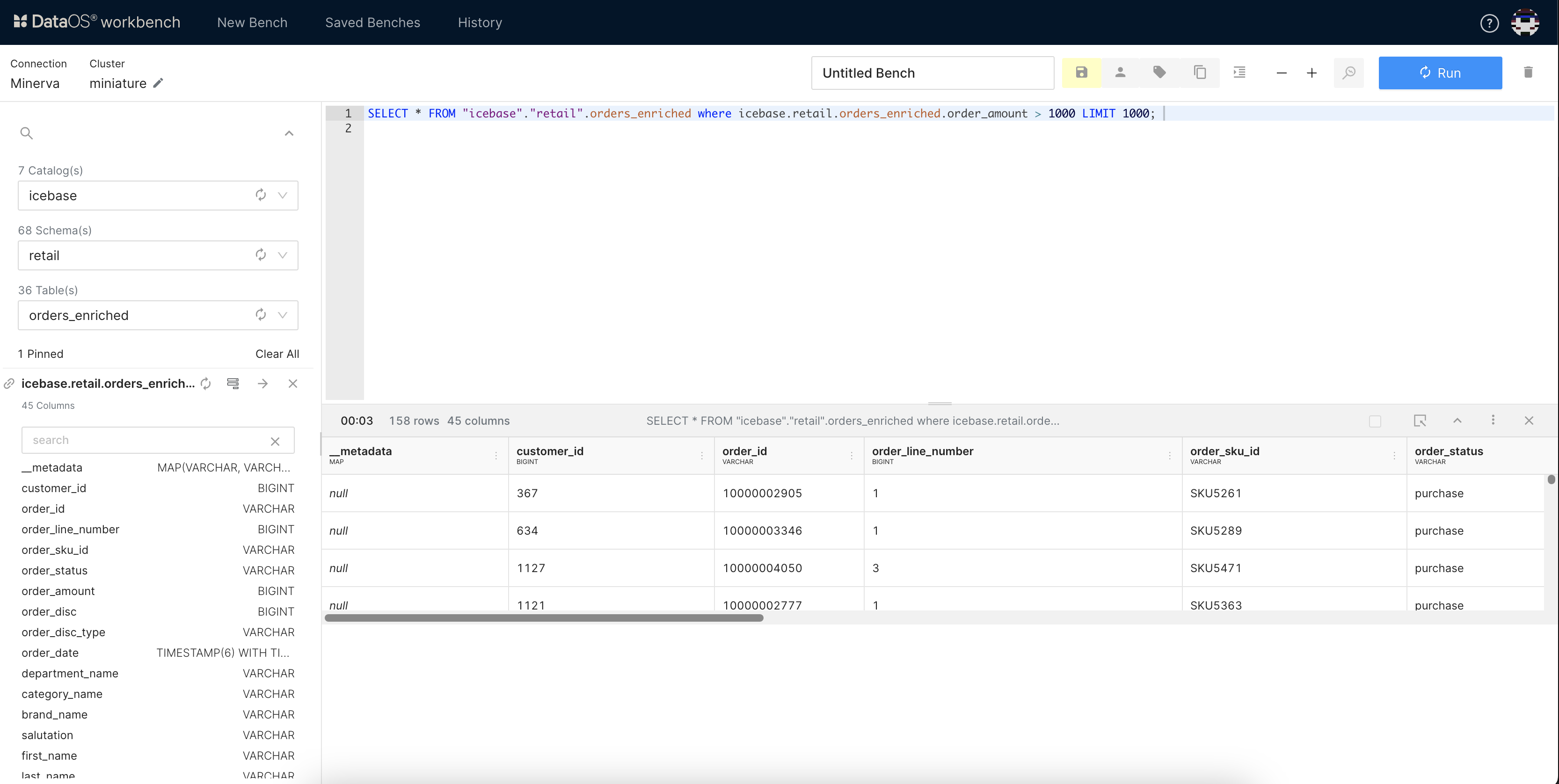

Open the data asset in the Workbench app.

-

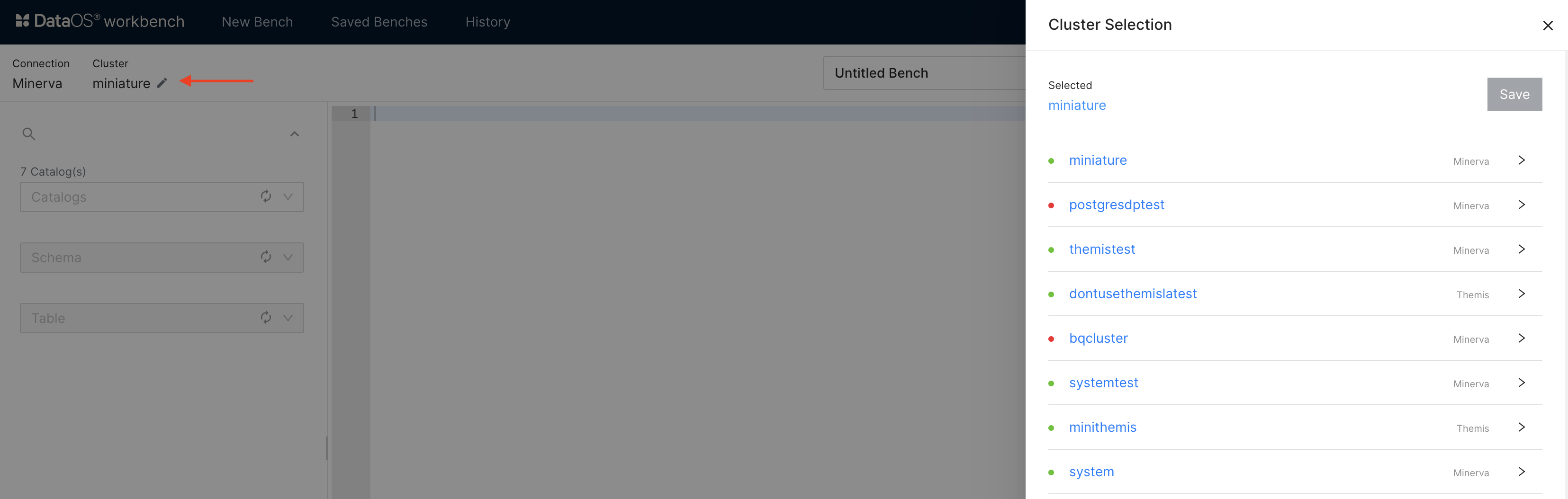

Select a Cluster to run your queries.

-

Select catalog, schema, and table.

-

Write and run queries.

Workbench also provides Studio, an interface that helps you compose SQL statements, whether you are an experienced SQL user or just getting started.
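Workbench runs these checks as SQL queries against the selected Cluster. Purely to illustrate what typical profiling checks compute, the minimal sketch below restates a few of them in plain Python over an in-memory list of values: row and null counts, range, mean, and a simple interquartile-range (IQR) rule for flagging outliers. The helper names, the sample annual_income values, and the choice of the 1.5 times IQR rule are assumptions for illustration, not Workbench features.

```python
from statistics import mean, quantiles
from typing import Optional, Sequence


def profile_numeric(values: Sequence[Optional[float]]) -> dict:
    """Summarize one numeric column: counts, range, and mean (nulls excluded)."""
    present = [v for v in values if v is not None]
    return {
        "rows": len(values),
        "nulls": len(values) - len(present),
        "min": min(present) if present else None,
        "max": max(present) if present else None,
        "mean": mean(present) if present else None,
    }


def iqr_outliers(values: Sequence[Optional[float]], k: float = 1.5) -> list:
    """Return values outside [Q1 - k*IQR, Q3 + k*IQR] (an assumed outlier rule)."""
    present = sorted(v for v in values if v is not None)
    if len(present) < 2:
        return []  # quantiles() needs at least two data points
    q1, _, q3 = quantiles(present, n=4)  # default 'exclusive' method
    spread = q3 - q1
    low, high = q1 - k * spread, q3 + k * spread
    return [v for v in present if v < low or v > high]


if __name__ == "__main__":
    # Hypothetical annual_income values: one missing, one suspiciously large.
    income = [40000, 45000, 50000, None, 55000, 60000, 1000000]
    print(profile_numeric(income))
    print(iqr_outliers(income))
```

In Workbench itself, the equivalent checks would typically be aggregate queries (COUNT, MIN, MAX, AVG) over the selected table.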

Define Data Architecture

- Design the overall data architecture, including data flow, storage, and processing.
- Develop a detailed plan outlining the transformation steps and processes for building the data pipeline.
- Provision and configure the necessary infrastructure and computing resources.

Build a Pipeline for Data Movement

- Write the YAML for the data processing Workflow based on your requirements. In the YAML, specify the input and output locations as depot addresses; contact your DataOS operator for these addresses.
- Specify the DataOS processing Stack that your Workflow uses. The example below uses the Flare Stack (flare:7.0).
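Both depot addresses in the example Workflow in this section, dataos://azureexternal01:sports_data/customers/ and dataos://lakehouse:sports/sample_customer?acl=rw, follow a dataos://[depot]:[collection]/[dataset] pattern with optional query parameters such as acl=rw. The minimal sketch below is a hypothetical helper, not part of any DataOS SDK, that splits an address along that assumed pattern; it can be handy for sanity-checking addresses you receive from your DataOS operator before putting them in the YAML. For object-storage depots, the part after the collection may simply be a folder path.

```python
from dataclasses import dataclass, field
from urllib.parse import parse_qs


@dataclass
class DepotAddress:
    depot: str
    collection: str
    dataset: str
    params: dict = field(default_factory=dict)


def parse_depot_address(address: str) -> DepotAddress:
    """Split an address of the assumed form dataos://[depot]:[collection]/[dataset]?k=v."""
    prefix = "dataos://"
    if not address.startswith(prefix):
        raise ValueError(f"expected a {prefix} address, got {address!r}")
    path, _, query = address[len(prefix):].partition("?")
    depot, colon, remainder = path.partition(":")
    if not colon or not depot:
        raise ValueError(f"missing depot name before ':' in {address!r}")
    collection, _, dataset = remainder.partition("/")
    # parse_qs returns a list per key; keep only the first value of each.
    params = {key: vals[0] for key, vals in parse_qs(query).items()}
    return DepotAddress(depot, collection, dataset.rstrip("/"), params)


if __name__ == "__main__":
    for addr in (
        "dataos://azureexternal01:sports_data/customers/",
        "dataos://lakehouse:sports/sample_customer?acl=rw",
    ):
        print(parse_depot_address(addr))
```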

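The following example Workflow YAML demonstrates the various sections of a Workflow. It ingests a customer CSV from Azure Blob Storage, cleans a few columns, and writes the result to the lakehouse catalog as an Iceberg dataset.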

```yaml
version: v1
name: wf-sports-test-customer  # Workflow name
type: workflow
tags:
  - customer
description: Workflow to ingest sports_data customer csv
workflow:
  title: customer csv
  dag:
    - name: sports-test-customer
      title: sports_data Dag
      description: This job ingests customer csv from Azure blob storage into lakehouse catalog
      tags:
        - customer
      stack: flare:7.0
      compute: runnable-default
      stackSpec:
        job:
          explain: true
          inputs:
            - name: sports_data_customer
              dataset: dataos://azureexternal01:sports_data/customers/
              format: CSV
              options:
                inferSchema: true
          logLevel: INFO
          steps:
            - sequence:
                - name: customer  # Reads all columns from the sports_data_customer input
                  sql: >
                    SELECT *
                    FROM sports_data_customer
                  functions:
                    - name: cleanse_column_names
                    # - name: change_column_case
                    #   case: lower
                    - name: find_and_replace
                      column: annual_income
                      sedExpression: "s/[$]//g"
                    - name: find_and_replace
                      column: annual_income
                      sedExpression: "s/,//g"
                    - name: set_type
                      columns:
                        customer_key: int
                        annual_income: int
                        total_children: int
                    - name: any_date
                      column: birth_date
                      asColumn: birth_date
          outputs:
            - name: customer
              dataset: dataos://lakehouse:sports/sample_customer?acl=rw
              format: Iceberg
              title: sports_data
              description: this dataset contains customer csv from sports_data
              tags:
                - customer
              options:
                saveMode: overwrite
```
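To make the cleansing steps in this Workflow concrete, the sketch below mimics in plain Python what the two find_and_replace functions and the set_type function are configured to do to a single record: s/[$]//g removes every dollar sign, s/,//g removes every comma, and the listed columns are then cast to integers. It is an illustration only, not how Flare executes these functions. The sample record and helper names are hypothetical, and while this sketch raises ValueError on a malformed value, the actual cast may handle bad values differently.

```python
import re

# Columns that the set_type function in the example Workflow casts to int.
INT_COLUMNS = ("customer_key", "annual_income", "total_children")


def strip_currency(raw: str) -> str:
    """Apply the two sed expressions in order: s/[$]//g, then s/,//g."""
    no_dollar = re.sub(r"[$]", "", raw)
    return re.sub(r",", "", no_dollar)


def clean_record(record: dict) -> dict:
    """Return a copy of a (hypothetical) CSV row with the example's cleansing applied."""
    cleaned = dict(record)
    cleaned["annual_income"] = strip_currency(record["annual_income"])
    for column in INT_COLUMNS:
        # int() raises ValueError on malformed input; Flare's cast may behave differently.
        cleaned[column] = int(cleaned[column])
    return cleaned


if __name__ == "__main__":
    row = {"customer_key": "11000", "annual_income": "$90,000", "total_children": "2"}
    print(clean_record(row))  # {'customer_key': 11000, 'annual_income': 90000, 'total_children': 2}
```

The order matters: both find_and_replace steps must run before set_type, otherwise a value such as "$90,000" could not be cast to an integer.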

Run the Workflow

- Run the Workflow using the apply command of the DataOS CLI.
- Use DataOS CLI commands to check the Workflow's runtime information.
- Once the Workflow completes, verify the output data asset in Metis.

Note that this Workflow will become part of the data product bundle.

For comprehensive documentation on each DataOS Resource, see dataos.info.

Explore the DataOS Resources, which serve as the building blocks for data products and processing Stacks. Also refer to the learning assets to reach the proficiency level required for the data product developer role.