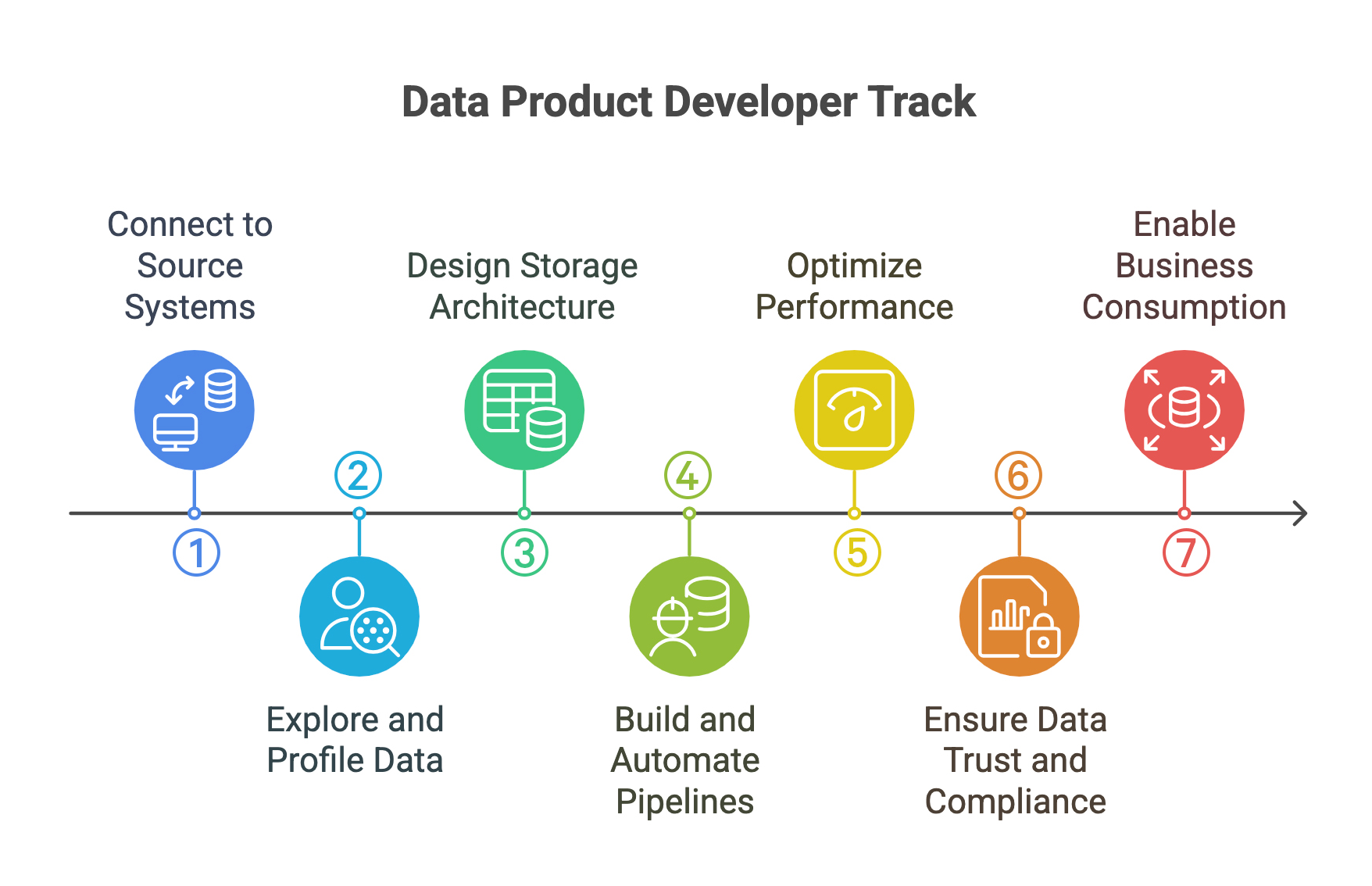

Data Product Developer Track

Overview

With a strong emphasis on aligning technical execution with business objectives, this track ensures learners can confidently translate requirements into reliable, governed data products that drive value across the organization.

Data Product Developers play a key role in creating, managing, and evolving Data Products within DataOS. They are responsible for building the data infrastructure that powers everything from analytics to business intelligence, making sure data flows smoothly through pipelines and stays accurate and accessible for users.

Who is this track for?

| Persona | Why It Matters | Level |
|---|---|---|
| Data Engineers / Developers | Build and manage pipelines and workflows in DataOS. Gain end-to-end expertise in creating scalable, governed Data Products. | Must-have |
| DevOps Engineers / DataOS Admins | Ensure performance, deployment, and platform reliability. Learn how to monitor, deploy, and operate data pipelines effectively in production. | Must-have |
| App Developers | Use data products to build apps or services. Understand how to enable consumption patterns via APIs and semantic models. | Must-have |
| Technical Leads / Architects | Guide data architecture and design decisions. Align technical choices with DataOS capabilities and governance policies. | Recommended |
| Data Product Owners | Oversee delivery and success of Data Products. Learn the technical depth needed to work closely with development teams and shape product vision. | Recommended |

What you’ll learn

As a Data Product Developer, you'll learn how to:

- Connect and Ingest Data Securely: Integrate APIs, SaaS, databases, and file systems using Secrets and Depots, and ingest data with governed workflows.
- Design and Build Governed Architecture: Architect layered storage (raw, curated, semantic) while ensuring lineage, access control, and compliance.
- Develop and Automate Scalable Pipelines: Build robust pipelines using Flare or Bento, with scheduling, triggers, and error handling.
- Ensure Data Quality and Trust: Apply validation, profiling, masking, and audit controls to maintain clean, compliant, and reliable data.
- Enable Consumption Through APIs and Models: Publish semantic models and APIs via Lens, Talos, and other tools to serve analysts, apps, and business users.

📚 Core modules

The learning track walks you through each stage of the Data Product lifecycle with hands-on modules and best practices, helping you build and manage production-ready Data Products in DataOS.

📘 Module overview

| No. | Module | Objective | Topics Covered |
|---|---|---|---|
| 1 | Connect to and Understand Source Systems | Securely connect to APIs, databases, file systems, and more, without unnecessary data movement. | Types of sources, secrets & depots, schema exploration, metadata scanning |
| 2 | Explore and Profile Ingested Data | Use Workbench and Metis to understand data quality and structure. | Scanner workflows, profiling, null/type/range checks, storage vs query access |
| 3 | Design Governed Storage Architecture | Architect a layered storage strategy while enforcing compliance and traceability. | Raw/curated zones, schema evolution, data tagging, retention strategies |
| 4 | Build and Automate Pipelines | Create robust, fault-tolerant pipelines using Flare, DBT, or Bento. | Workflow DAGs, scheduling, event triggers, retries, stream/batch handling, monitoring |
| 5 | Optimize for Performance and Scalability | Tune data and workflows to handle scale efficiently. | Partitioning, parallelism, compute monitoring, cost-performance trade-offs |
| 6 | Ensure Data Trust and Compliance | Integrate profiling, quality checks, and data masking. | Soda stack, validation logic, PII masking, policy enforcement (GDPR, HIPAA, etc.) |
| 7 | Serve and Enable Business Consumption | Publish data for downstream users via APIs, notebooks, and BI tools. | Semantic models (Lens), APIs (Talos, Lakesearch), access control, DPH registration |

✅ Start learning

Ready to Dive In?